In this article, we will create a k3s cluster in a private subnet and an nginx load balancer in a public subnet using Terraform.

- Creating the VPC and Subnets: We’ll create one VPC and configure dedicated public and private subnets.

- Deploying EC2 Instances: We’ll launch three EC2 instances. Two run in the private subnet as the k3s master node and the k3s worker node; the third runs in the public subnet as the nginx load balancer.

- Implementing NAT Gateways and Public Subnets: We’ll set up a NAT gateway in the public subnet to give the private subnet outbound internet access.

- Installing nginx Server: We’ll install and configure nginx on the EC2 instance in the public subnet, where it acts as the load balancer.

- k3s Clustering: We’ll install and configure k3s on the master and worker nodes.

- Pod and Service manifests: We’ll create a Deployment manifest that runs two Pod replicas and a Service manifest with nodePort set to 30080.

- Terraform Configuration: We’ll write the Terraform configuration that creates the VPC, subnets, security groups, route tables, and instances, and bootstraps the k3s cluster.

Table of contents

- Configure AWS CLI

- Generate SSH key pair

- Set Up Terraform Configuration

- Define Generic Variables

- Define VPC and nginx load balancer output values

- Initialize Terraform

- Validate Terraform Configuration

- Plan Terraform Configuration

- Apply Terraform Configuration

- Login to AWS console and check the VPC, subnets, security groups, and route tables

- Login to public nginx EC2 instance via SSH

- Copy ssh key to nginx EC2 instance for access to k3s master node

- Login to k3s master node via SSH and test kubectl commands

- Access the NGINX load balancer using public IP

- Destroy the Infrastructure

Configure AWS CLI

Before configuring AWS CLI, let’s install it first.

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
unzip awscliv2.zip
sudo ./aws/install

Now, let’s configure AWS CLI.

aws configure

Generate SSH key pair

Before we start, let’s generate an SSH key pair.

ssh-keygen -t rsa -b 2048 -f ~/.ssh/web_key -N ""

This creates two files in your .ssh directory: web_key (the private key) and web_key.pub (the public key). You’ll need this key pair to access your EC2 instances securely over SSH.

Set Up Terraform Configuration

Create main.tf and edit the file as follows.

Configure the AWS Provider

provider "aws" {
  region = var.aws_region
}

Now use the SSH key pair for the creation of your EC2 instances.

resource "aws_key_pair" "web_key" {
  key_name   = "web_key"
  public_key = file("~/.ssh/web_key.pub")
}

After that, we’ll create a VPC, subnets, Internet Gateway, NAT Gateway, Route Tables, Security Groups, and finally EC2 instances.

# VPC
resource "aws_vpc" "main" {
  cidr_block           = var.vpc_cidr
  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = {
    Name = "${var.cluster_name}-vpc"
  }
}

# Public Subnet
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = var.public_subnet_cidr
  availability_zone       = "${var.aws_region}a"
  map_public_ip_on_launch = true

  tags = {
    Name = "${var.cluster_name}-public"
  }
}

# Private Subnet
resource "aws_subnet" "private" {
  vpc_id            = aws_vpc.main.id
  cidr_block        = var.private_subnet_cidr
  availability_zone = "${var.aws_region}a"

  tags = {
    Name = "${var.cluster_name}-private"
  }
}

# Internet Gateway
resource "aws_internet_gateway" "main" {
  vpc_id = aws_vpc.main.id

  tags = {
    Name = "${var.cluster_name}-igw"
  }
}

# NAT Gateway
resource "aws_eip" "nat" {
  domain = "vpc"
}

resource "aws_nat_gateway" "main" {
  allocation_id = aws_eip.nat.id
  subnet_id     = aws_subnet.public.id

  # The NAT gateway needs the VPC's Internet Gateway to exist first
  depends_on = [aws_internet_gateway.main]

  tags = {
    Name = "${var.cluster_name}-nat"
  }
}

# Route Tables
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  # Public subnet: default route through the Internet Gateway
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.main.id
  }

  tags = {
    Name = "${var.cluster_name}-public-rt"
  }
}

resource "aws_route_table" "private" {
  vpc_id = aws_vpc.main.id

  # Private subnet: default route through the NAT Gateway (outbound only)
  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.main.id
  }

  tags = {
    Name = "${var.cluster_name}-private-rt"
  }
}

# Route Table Associations
resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

resource "aws_route_table_association" "private" {
  subnet_id      = aws_subnet.private.id
  route_table_id = aws_route_table.private.id
}

# Security Groups
resource "aws_security_group" "k3s" {
  name        = "${var.cluster_name}-k3s"
  description = "K3s cluster security group"
  vpc_id      = aws_vpc.main.id

  # Kubernetes API server
  ingress {
    from_port   = 6443
    to_port     = 6443
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.main.cidr_block]
  }

  # Kubelet
  ingress {
    from_port   = 10250
    to_port     = 10250
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.main.cidr_block]
  }

  # SSH
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # NodePort used by the nginx Service
  ingress {
    from_port   = 30080
    to_port     = 30080
    protocol    = "tcp"
    cidr_blocks = [aws_vpc.main.cidr_block]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "k3s-cluster-sg"
  }
}

resource "aws_security_group" "nginx" {
  name        = "${var.cluster_name}-nginx"
  description = "NGINX load balancer security group"
  vpc_id      = aws_vpc.main.id

  # HTTP
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # HTTPS
  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # SSH
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "k3s-nginx-sg"
  }
}

# Data source for Ubuntu AMI
data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"] # Canonical

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"]
  }

  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}

# Random token for K3s
resource "random_password" "k3s_token" {
  length  = 32
  special = false
}

# K3s Server Instance and create the deployment and service objects
resource "aws_instance" "k3s_server" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = var.instance_type
  subnet_id              = aws_subnet.private.id
  vpc_security_group_ids = [aws_security_group.k3s.id]
  key_name               = aws_key_pair.web_key.key_name

  # Wait for the private subnet's NAT route so the install script can reach the internet
  depends_on = [aws_route_table_association.private]

  user_data = <<-EOF
    #!/bin/bash
    # Install K3s
    curl -sfL https://get.k3s.io | sh -s - server \
      --token=${random_password.k3s_token.result} \
      --disable-cloud-controller \
      --disable servicelb \
      --tls-san=$(curl -s http://169.254.169.254/latest/meta-data/local-ipv4)

    # Wait for K3s to be ready
    while ! sudo kubectl get nodes; do
      echo "Waiting for K3s to be ready..."
      sleep 5
    done

    # Create the deployment YAML
    cat > /home/ubuntu/k3s-app.yml <<'EOY'
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80
            command:
            - /bin/sh
            - -c
            - |
              echo "Hello from Pod $(hostname)" > /usr/share/nginx/html/index.html
              exec nginx -g 'daemon off;'
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      type: NodePort
      selector:
        app: nginx
      ports:
      - port: 80
        targetPort: 80
        nodePort: 30080
    EOY

    # Set proper ownership
    chown ubuntu:ubuntu /home/ubuntu/k3s-app.yml

    # Apply the configuration
    sudo kubectl apply -f /home/ubuntu/k3s-app.yml

    # Wait for pods to be ready
    while [[ $(sudo kubectl get pods -l app=nginx -o 'jsonpath={..status.conditions[?(@.type=="Ready")].status}') != "True True" ]]; do
      echo "Waiting for pods to be ready..."
      sleep 5
    done

    echo "K3s deployment completed!"
  EOF

  tags = {
    Name = "${var.cluster_name}-master"
  }
}

# K3s Worker Node Instance
resource "aws_instance" "k3s_worker" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = var.instance_type
  subnet_id              = aws_subnet.private.id
  vpc_security_group_ids = [aws_security_group.k3s.id]

  # Join the cluster as an agent, using the server's private IP and the shared token
  user_data = <<-EOF
    #!/bin/bash
    curl -sfL https://get.k3s.io | K3S_URL=https://${aws_instance.k3s_server.private_ip}:6443 \
      K3S_TOKEN=${random_password.k3s_token.result} \
      sh -
  EOF

  depends_on = [aws_instance.k3s_server]

  tags = {
    Name = "${var.cluster_name}-worker"
  }
}

# Update security group to allow communication between nodes
# Note: the AWS provider documentation warns that standalone aws_security_group_rule
# resources conflict with in-line ingress/egress blocks on the same security group.
# If later applies keep changing this rule, consider expressing it as an in-line
# ingress block with self = true instead.
resource "aws_security_group_rule" "k3s_node_communication" {
  type                     = "ingress"
  from_port                = 0
  to_port                  = 0
  protocol                 = "-1"
  source_security_group_id = aws_security_group.k3s.id
  security_group_id        = aws_security_group.k3s.id
  description              = "Allow all internal traffic between K3s nodes"
}

# NGINX Load Balancer Instance and install nginx server
resource "aws_instance" "nginx" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = var.instance_type
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.nginx.id]
  key_name               = aws_key_pair.web_key.key_name

  user_data = <<-EOF
    #!/bin/bash
    apt-get update
    apt-get install -y nginx

    # Remove default nginx site
    rm -f /etc/nginx/sites-enabled/default

    # Configure NGINX as reverse proxy
    cat > /etc/nginx/conf.d/default.conf <<'EOC'
    upstream k3s_nodes {
      server ${aws_instance.k3s_server.private_ip}:30080;
      server ${aws_instance.k3s_worker.private_ip}:30080;
    }

    server {
      listen 80 default_server;
      server_name _;

      location / {
        proxy_pass http://k3s_nodes;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;

        # Add timeouts
        proxy_connect_timeout 60s;
        proxy_read_timeout 60s;
      }

      # Simple status page
      location /status {
        # default_type sets the Content-Type of the returned body;
        # add_header would send a second Content-Type header instead
        default_type application/json;
        return 200 '{"status": "up", "backend_nodes": ["${aws_instance.k3s_server.private_ip}", "${aws_instance.k3s_worker.private_ip}"]}';
      }
    }
    EOC

    # Restart NGINX
    systemctl restart nginx
  EOF

  tags = {
    Name = "${var.cluster_name}-nginx"
  }
}

Define Generic Variables
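The subnet CIDR defaults in this section have to fall inside the VPC CIDR, otherwise AWS rejects the subnet. As a rough sanity check before editing the defaults, the containment rule can be sketched in plain shell. The helper names ip_to_int and cidr_contains are hypothetical and are not part of Terraform or the AWS CLI; the sketch handles IPv4 only.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; IPv4 only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if CIDR $2 lies entirely inside CIDR $1, non-zero otherwise.
cidr_contains() {
  outer_ip=${1%/*}; outer_len=${1#*/}
  inner_ip=${2%/*}; inner_len=${2#*/}
  # The inner block must not be larger than the outer block.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  # Netmask of the outer block as a 32-bit integer.
  mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  # Both addresses must share the outer block's network prefix.
  [ $(( $(ip_to_int "$outer_ip") & mask )) -eq $(( $(ip_to_int "$inner_ip") & mask )) ]
}

# Check the default subnets against the default VPC range.
for subnet in 10.0.1.0/24 10.0.2.0/24; do
  if cidr_contains 10.0.0.0/16 "$subnet"; then
    echo "$subnet is inside 10.0.0.0/16"
  else
    echo "$subnet is outside 10.0.0.0/16"
  fi
done
```

The check only covers containment; it does not detect two subnets that overlap each other.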

# variables.tf
variable "aws_region" {
  description = "AWS region"
  type        = string
  default     = "ap-southeast-1"
}

variable "vpc_cidr" {
  description = "CIDR block for VPC"
  type        = string
  default     = "10.0.0.0/16"
}

variable "public_subnet_cidr" {
  description = "CIDR block for public subnet"
  type        = string
  default     = "10.0.1.0/24"
}

variable "private_subnet_cidr" {
  description = "CIDR block for private subnet"
  type        = string
  default     = "10.0.2.0/24"
}

variable "cluster_name" {
  description = "Name of the K3s cluster"
  type        = string
  default     = "k3s-cluster"
}

variable "instance_type" {
  description = "EC2 instance type"
  type        = string
  default     = "t2.micro"
}

Define VPC and nginx load balancer output values

# outputs.tf
output "nginx_public_ip" {
  value = aws_instance.nginx.public_ip
}

output "nginx_url" {
  value = "http://${aws_instance.nginx.public_ip}"
}

output "k3s_master_private_ip" {
  value = aws_instance.k3s_server.private_ip
}

output "k3s_worker_private_ip" {
  value = aws_instance.k3s_worker.private_ip
}

Initialize Terraform

terraform init

Validate Terraform Configuration

terraform validate

Plan Terraform Configuration

terraform plan

Apply Terraform Configuration

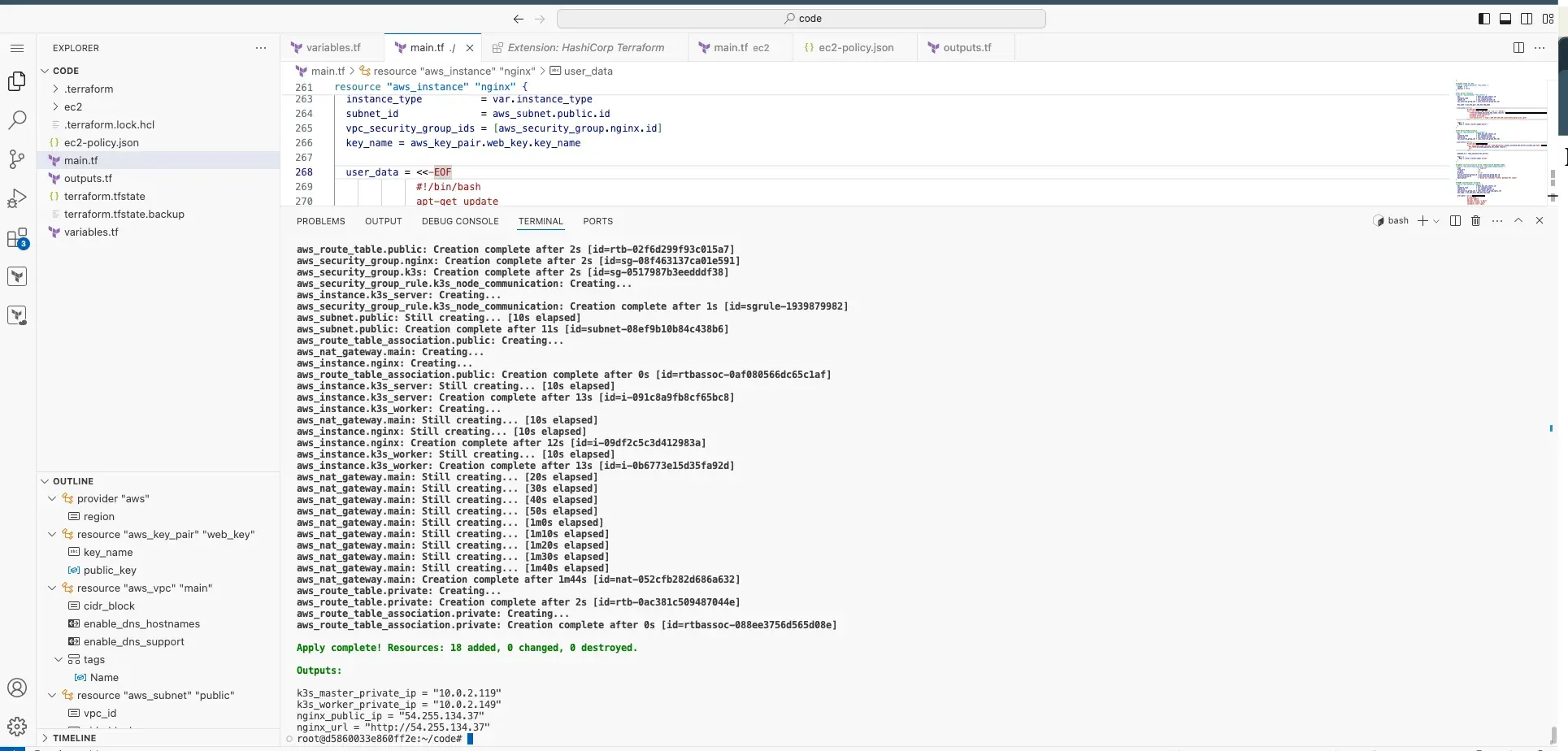

terraform apply -auto-approve

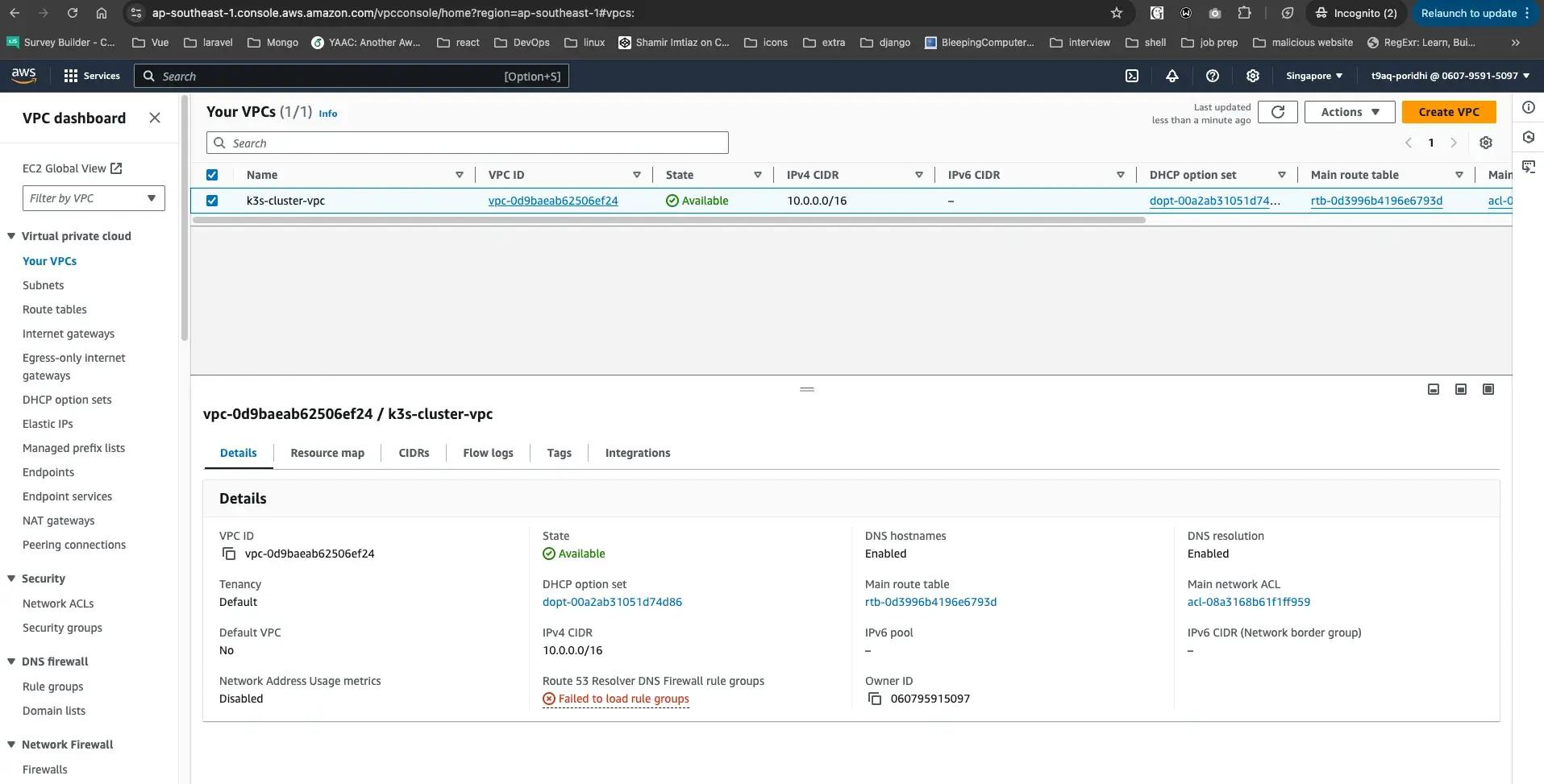

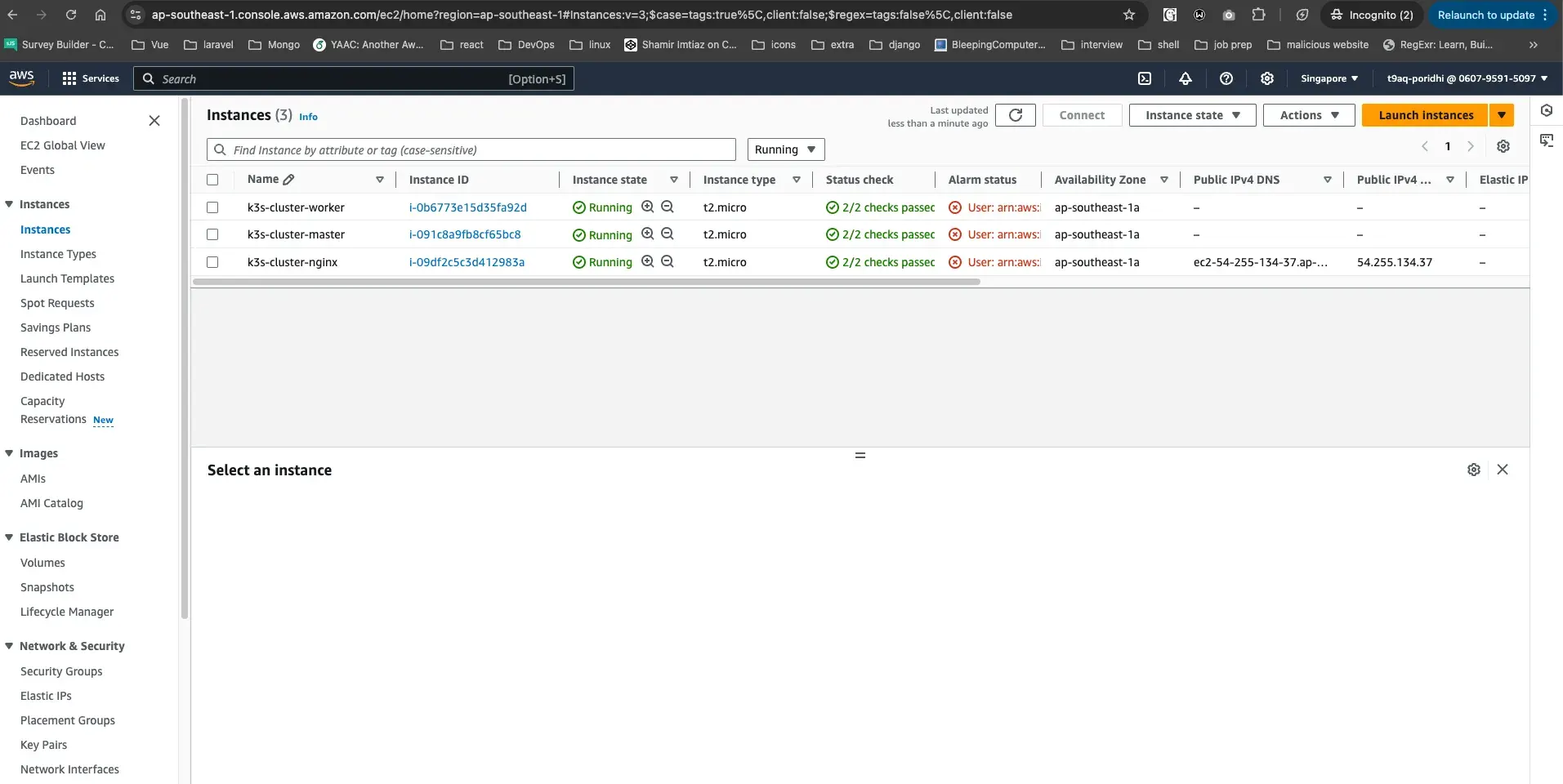
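The init, validate, plan, and apply steps above can also be chained so that the plan you reviewed is exactly the plan that gets applied, rather than a fresh one computed by apply -auto-approve. This is a minimal sketch, assuming Terraform is on the PATH. The function name tf_deploy and the TF_BIN override are hypothetical names introduced for illustration, and tfplan is an arbitrary file name.

```shell
#!/bin/sh
# tf_deploy is a hypothetical wrapper; TF_BIN is a hypothetical override
# that lets the sequence be dry-run with a stand-in command such as echo.
tf_deploy() {
  tf=${TF_BIN:-terraform}
  "$tf" init -input=false &&                 # download providers
  "$tf" validate &&                          # check syntax and references
  "$tf" plan -input=false -out=tfplan &&     # save the plan to a file
  "$tf" apply -input=false tfplan            # apply exactly the saved plan
}

# Usage (from the directory containing main.tf):
#   tf_deploy
# Dry run that only prints the arguments each step would receive:
#   TF_BIN=echo tf_deploy
```

Each step runs only if the previous one succeeded, so a validation error stops the sequence before anything is created.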

Login to AWS console and check the VPC, subnets, security groups, and route tables

VPC

EC2 Instances

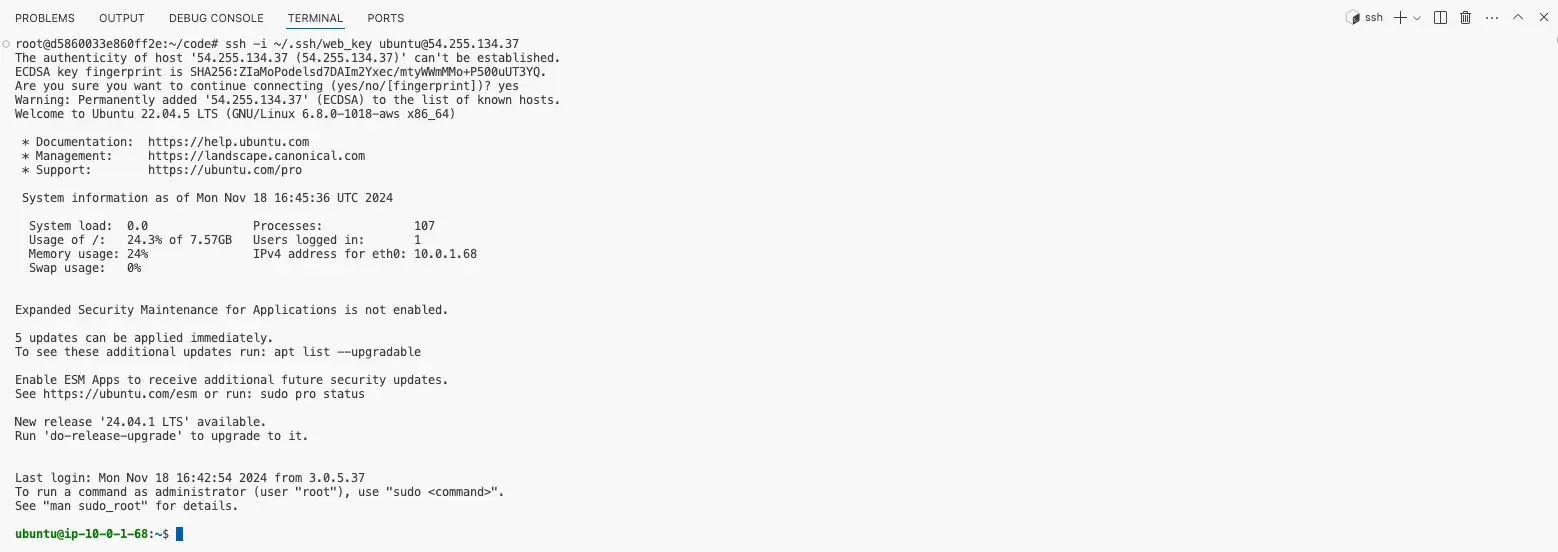

Login to public nginx EC2 instance via SSH

Here, we will check that we can access the public nginx EC2 instance via SSH.

ssh -i ~/.ssh/web_key ubuntu@<nginx-public-ip>
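Instead of copying the public IP by hand, the placeholder can be filled from the nginx_public_ip output defined in outputs.tf. A small sketch, assuming it is run from the directory that holds the Terraform state; bastion_target and the TF_BIN override are hypothetical names used only for illustration.

```shell
#!/bin/sh
# bastion_target is a hypothetical helper; TF_BIN is a hypothetical
# override for the terraform binary (useful for testing).
bastion_target() {
  tf=${TF_BIN:-terraform}
  # -raw prints the output value without quotes
  printf 'ubuntu@%s\n' "$("$tf" output -raw nginx_public_ip)"
}

# Usage:
#   ssh -i ~/.ssh/web_key "$(bastion_target)"
```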

Copy ssh key to nginx EC2 instance for access to k3s master node

Here, we will copy the SSH private key to the nginx EC2 instance so that it can be used to reach the k3s master node, which sits in the private subnet and has no public IP. After logging in to the nginx instance, restrict the permissions of the copied key before using it.

scp -i ~/.ssh/web_key ~/.ssh/web_key ubuntu@<nginx-public-ip>:~/

ssh -i ~/.ssh/web_key ubuntu@<nginx-public-ip>

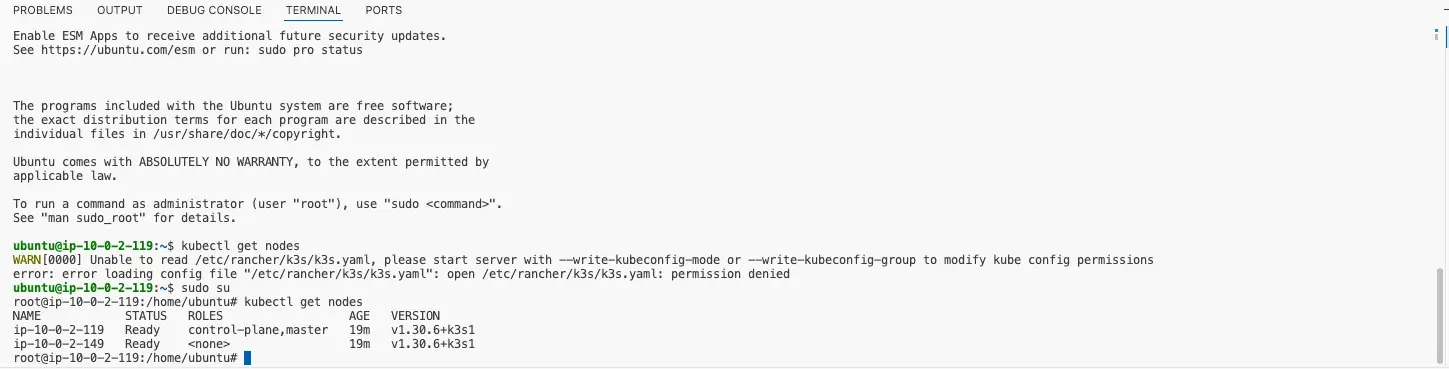

chmod 600 web_key

Login to k3s master node via SSH and test kubectl commands
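Before connecting, it can help to confirm that the copied key really has a restrictive mode, because OpenSSH refuses private keys that other users can read. The sketch below assumes a Linux host with GNU coreutils (for stat -c); key_mode_ok is a hypothetical helper name, and it accepts only the two modes most commonly used for private keys.

```shell
#!/bin/sh
# key_mode_ok is a hypothetical helper. It relies on GNU stat (-c '%a'),
# which is what Ubuntu ships; BSD/macOS stat uses different flags.
key_mode_ok() {
  mode=$(stat -c '%a' "$1") || return 2      # file missing or unreadable
  case $mode in
    600|400) return 0 ;;                      # owner-only access
    *)
      echo "$1 has mode $mode; run: chmod 600 $1" >&2
      return 1
      ;;
  esac
}

# Usage on the nginx instance, before connecting to the master node:
#   key_mode_ok web_key && echo "web_key permissions look fine"
```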

Here, we will login to the k3s master node via SSH and check the status of the k3s cluster using kubectl commands.

ssh -i web_key ubuntu@<k3s-master-private-ip>Check the status of k3s cluster

kubectl get nodes

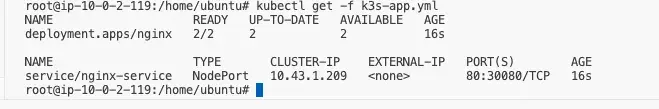

Check the status of POD and Service objects

kubectl get pods

kubectl get svc

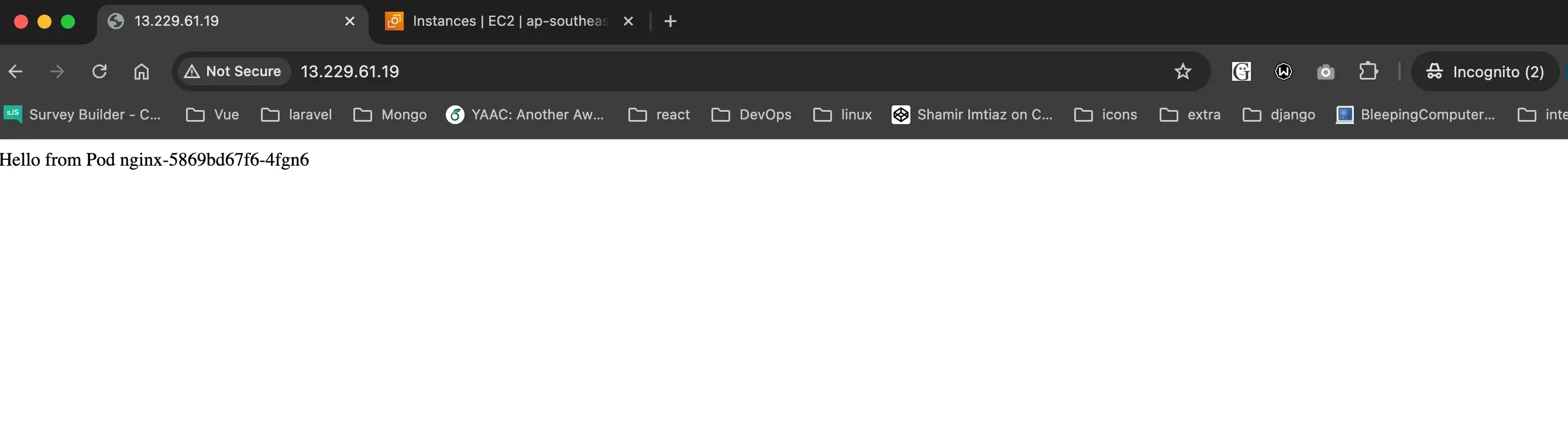

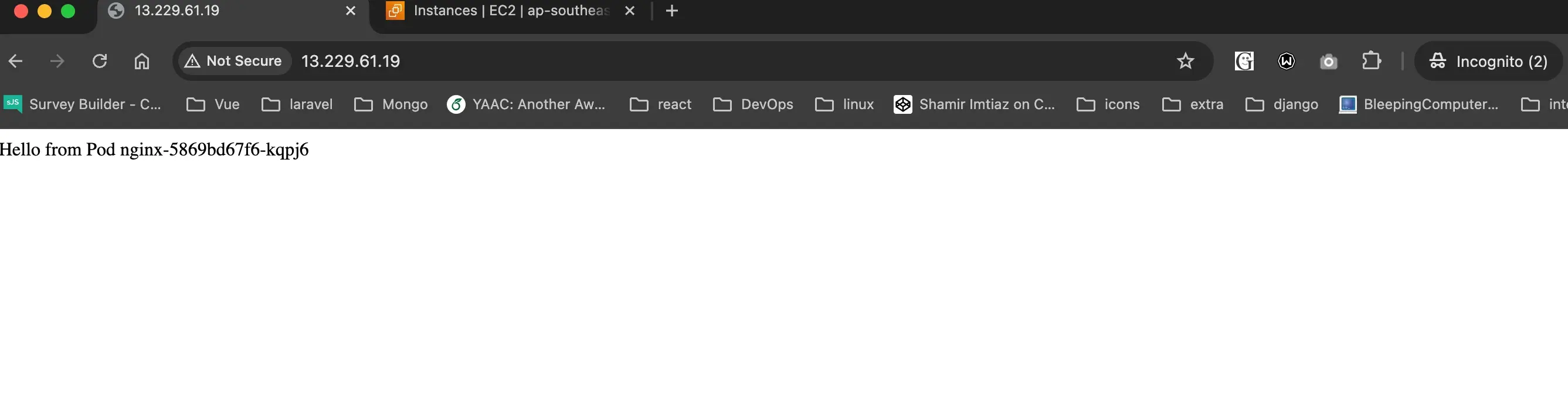
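The node check above can be turned into a simple count, which is handy when scripting the verification. This is a sketch only: count_ready_nodes is a hypothetical helper that reads the output of kubectl get nodes --no-headers and assumes the STATUS value is the second column, as in the default table output.

```shell
#!/bin/sh
# count_ready_nodes is a hypothetical helper. It counts input lines whose
# second column is exactly "Ready" (so NotReady or cordoned nodes are skipped).
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on the master node:
#   sudo kubectl get nodes --no-headers | count_ready_nodes
```

For the cluster built in this article, a result of 2 would mean that both the master and the worker have joined and are Ready.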

Access the NGINX load balancer using public IP

Here, we will access the NGINX load balancer using its public IP and check the response. Repeated requests should return greetings from different Pods: nginx forwards each request to port 30080 (the nodePort) on one of the k3s nodes, and the Service then hands it to one of the Pod replicas.
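One way to watch the load balancing is to request the page several times and count how often each Pod answers. The sketch below assumes curl is installed on your workstation; poll_url and tally_responses are hypothetical helper names, and the URL is the nginx_url value from the Terraform outputs.

```shell
#!/bin/sh
# poll_url and tally_responses are hypothetical helpers for illustration.

# Fetch URL $1 a number of times ($2, default 10), printing each response body.
poll_url() {
  url=$1
  count=${2:-10}
  i=0
  while [ "$i" -lt "$count" ]; do
    curl -s --max-time 5 "$url"
    i=$((i + 1))
  done
}

# Count identical lines on stdin, most frequent first.
tally_responses() {
  sort | uniq -c | sort -rn
}

# Usage (replace the placeholder with your nginx public IP):
#   poll_url "http://<nginx-public-ip>" 10 | tally_responses
```

With two replicas, the tally would typically show two distinct "Hello from Pod ..." lines, though the split between them is not guaranteed to be even.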

Destroy the Infrastructure

To destroy the infrastructure, run the following command:

terraform destroy -auto-approve